Job DSL Part II

In the first part of this little series I talked about some of the difficulties you have to tackle when dealing with microservices, and how the Job DSL Plugin can help you automate the creation of Jenkins jobs. In today’s installment I will show you some of the benefits in maintenance. We will also automate the job creation itself, and create some views.

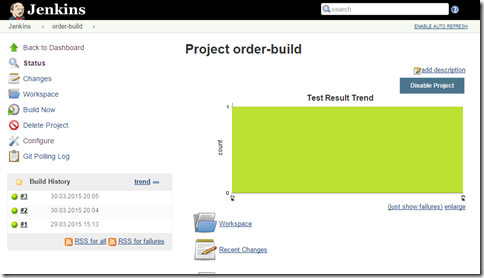

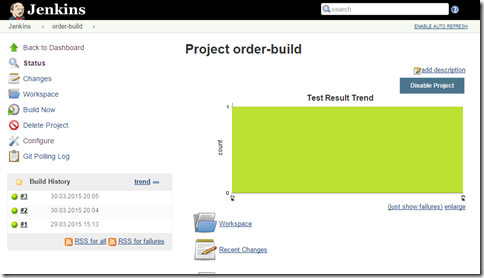

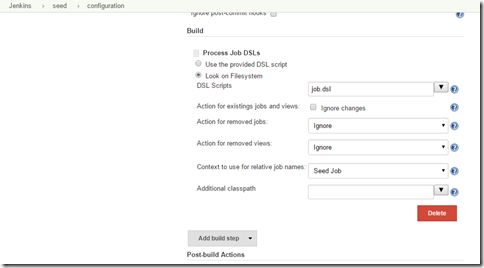

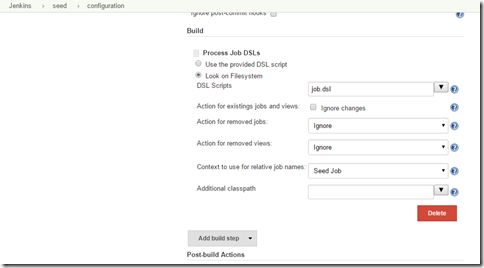

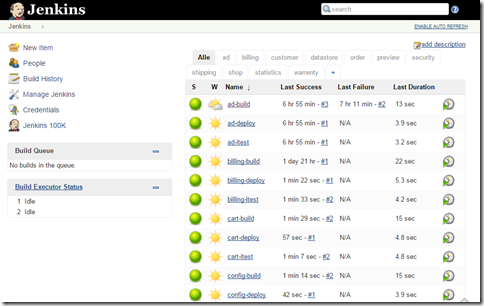

Let’s recap what we have so far. We have created our own DSL to describe the microservices. Our build Groovy script iterates over the microservices and creates a build job for each using the Job DSL. So what if we want to alter our existing jobs? Just give it a try: we’d like to have JUnit test reports in our jobs. All we have to do is extend our job DSL a little bit by adding a JUnit publisher:

    freeStyleJob("${name}-build") {
        ...
        steps {
            maven {
                mavenInstallation('3.1.1')
                goals('clean install')
            }
        }
        publishers {
            archiveJunit('**/target/surefire-reports/*.xml')
        }
    }

Run the seed job again. All existing jobs have been extended by the JUnit report. The great thing about the Job DSL is that it alters only the config. The job’s history and all other data remain, just as if you had edited the job using the UI. So maintenance of all our jobs is a breeze using the Job DSL.

Note: Be aware that the report does not show until you run the tests twice.

Automating the job generation itself

Wouldn’t it be cool if the jobs were automatically re-generated whenever we change our job description or add another microservice? Quite easy. Currently our microservice- and job-DSL are hardcoded into the seed job. But we can move them into a (separate) repository, watch and check it out in our seed job, and use this instead of the hardcoded DSL. So at first we put our microservice- and job-DSL into two files called microservices.dsl and job.dsl.

microservices.dsl:

    microservices {
        ad {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'ad'
        }
        billing {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'billing'
        }
        cart {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'cart'
        }
        config {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'config'
        }
        controlling {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'controlling'
        }
        customer {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'customer'
        }
        datastore {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'datastore'
        }
        help {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'help'
        }
        logon {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'logon'
        }
        order {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'order'
        }
        preview {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'preview'
        }
        security {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'security'
        }
        shipping {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'shipping'
        }
        shop {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'shop'
        }
        statistics {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'statistics'
        }
        warrenty {
            url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
            branch = 'warrenty'
        }
    }

job.dsl:

    def slurper = new ConfigSlurper()
    // fix classloader problem using ConfigSlurper in job dsl
    slurper.classLoader = this.class.classLoader
    def config = slurper.parse(readFileFromWorkspace('microservices.dsl'))

    // create job for every microservice
    config.microservices.each { name, data ->
        createBuildJob(name, data)
    }

    def createBuildJob(name, data) {
        freeStyleJob("${name}-build") {
            scm {
                git {
                    remote {
                        url(data.url)
                    }
                    branch(data.branch)
                    createTag(false)
                }
            }
            triggers {
                scm('H/15 * * * *')
            }
            steps {
                maven {
                    mavenInstallation('3.1.1')
                    goals('clean install')
                }
            }
            publishers {
                archiveJunit('**/target/surefire-reports/*.xml')
            }
        }
    }

We now check both files into a repository so we can reference them in our seed build (you don’t have to do this, I already prepared that for you in the master branch of our jobdsl-sample repository at GitHub).

Finally we have to adapt our seed build to watch and check out the jobdsl-sample repository…

… and use the checked out job.dsl instead of the hardcoded one:

That’s it. Now the seed job polls for changes on our sample repository, so if somebody adds a new microservice or alters our job.dsl, all jobs will be (re-)created automatically without any manual intervention.
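If you prefer text over screenshots, the seed job configuration described above could itself be written down in the Job DSL. This is only a sketch of that idea, not how the seed job in this post was created (that was done in the Jenkins UI). The job name `seed` and the polling schedule are assumptions.

```groovy
// Sketch only: a seed job described with the Job DSL.
// The job name 'seed' and the polling interval are assumptions.
freeStyleJob('seed') {
    scm {
        git {
            remote {
                url('https://github.com/ralfstuckert/jobdsl-sample.git')
            }
            branch('master')
        }
    }
    triggers {
        // poll the DSL repository for changes
        scm('H/15 * * * *')
    }
    steps {
        dsl {
            // process the checked-out script instead of a hardcoded one
            external('job.dsl')
        }
    }
}
```

Something still has to run this script once, so you will need at least one manually created job to bootstrap it.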

Note: We could have put both the microservices.dsl and job.dsl in one file, as we had it in the first place. But now you can use your microservices.dsl independently of the job.dsl to automate all kinds of stuff. In our current project we use it for deployment and for tooling such as monitoring.
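To give you an idea of what such reuse might look like, here is a small standalone Groovy sketch that runs without Jenkins. It parses a shortened copy of the microservice description with a plain ConfigSlurper and derives one `git clone` command per service. The `cloneCommands` name and the clone use case are made up for illustration, they are not taken from our project.

```groovy
// Standalone Groovy, no Jenkins required.
// The inline text stands in for the content of microservices.dsl.
def text = '''
microservices {
    ad {
        url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
        branch = 'ad'
    }
    billing {
        url = 'https://github.com/ralfstuckert/jobdsl-sample.git'
        branch = 'billing'
    }
}
'''

def config = new ConfigSlurper().parse(text)

// hypothetical use case: one git clone command per microservice
def cloneCommands = config.microservices.collect { name, data ->
    "git clone -b ${data.branch} ${data.url} ${name}".toString()
}

cloneCommands.each { println it }
```

Outside of the Job DSL there is no need for the classloader fix, and you would read the file yourself (e.g. with `new File('microservices.dsl').text`) instead of calling `readFileFromWorkspace`.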

Creating Views

In the post Bringing in the herd I described how helpful views can be to get an overview of all your jobs, or even aggregate information. The Job DSL allows you to generate views just like jobs, so it’s a perfect fit for that need. In order to have some examples to play with, we will increase our set of jobs by adding an integration-test and deploy-job for every microservice. These jobs don’t do anything at all (means: they are worthless), we just use them to set up a build pipeline.

Note: in order to use the build pipeline, you have to install the Build Pipeline Plugin.

We set up a new DSL file called pipeline.dsl and push it to our sample repository (again you don’t have to do this, it’s already there). We add two additional jobs per microservice, and set up a downstream cascade. Means: at the end of each build (-pipeline-step) the next one is triggered.

pipeline.dsl:

    def slurper = new ConfigSlurper()
    // fix classloader problem using ConfigSlurper in job dsl
    slurper.classLoader = this.class.classLoader
    def config = slurper.parse(readFileFromWorkspace('microservices.dsl'))

    // create job for every microservice
    config.microservices.each { name, data ->
        createBuildJob(name, data)
        createITestJob(name, data)
        createDeployJob(name, data)
    }

    def createBuildJob(name, data) {
        freeStyleJob("${name}-build") {
            scm {
                git {
                    remote {
                        url(data.url)
                    }
                    branch(data.branch)
                    createTag(false)
                }
            }
            triggers {
                scm('H/15 * * * *')
            }
            steps {
                maven {
                    mavenInstallation('3.1.1')
                    goals('clean install')
                }
            }
            publishers {
                archiveJunit('**/target/surefire-reports/*.xml')
                // trigger the integration test after a successful build
                downstream("${name}-itest", 'SUCCESS')
            }
        }
    }

    def createITestJob(name, data) {
        freeStyleJob("${name}-itest") {
            publishers {
                // trigger the deployment after a successful integration test
                downstream("${name}-deploy", 'SUCCESS')
            }
        }
    }

    def createDeployJob(name, data) {
        freeStyleJob("${name}-deploy") {}
    }

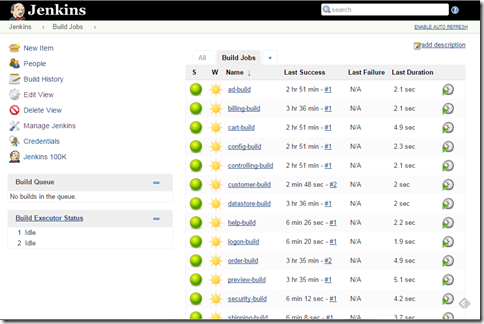

Now change your seed job to use use the

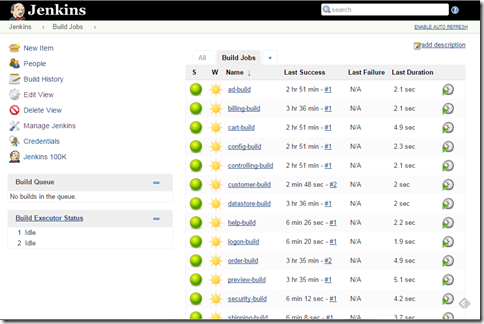

pipeline.dsl instead of the job.dsl and let it run. Now we have three jobs for each microservice cascaded as a build pipeline.
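If the cascade is hard to picture, the following standalone Groovy sketch prints which job triggers which. It does not touch Jenkins at all, it only derives the job names from the same suffixes used in pipeline.dsl. The names `stages` and `downstreamChain` are made up for this illustration.

```groovy
// Standalone Groovy: derive the downstream chain from an ordered list of steps.
def stages = ['build', 'itest', 'deploy']   // same suffixes as in pipeline.dsl

// hypothetical helper: maps each job name to the job it triggers
// (null for the last job in the pipeline)
def downstreamChain = { String service ->
    def jobs = stages.collect { "${service}-${it}".toString() }
    def chain = [:]
    jobs.eachWithIndex { job, i ->
        chain[job] = i + 1 < jobs.size() ? jobs[i + 1] : null
    }
    chain
}

downstreamChain('cart').each { job, next ->
    println "${job} -> ${next ?: '(end of pipeline)'}"
}
```

The build pipeline view follows exactly this kind of chain, starting from the first job.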

The Build Pipeline Plugin comes with its own view, the build pipeline view. If you set up this view and provide a build job, the view will render all cascading jobs as a pipeline. So now we are gonna generate a pipeline view for each microservice. As before I already provided the DSL for you in the repository.

pipeline-view.dsl:

    ...
    // create build pipeline view for every service
    config.microservices.each { name, data ->
        buildPipelineView(name) {
            selectedJob("${name}-build")
        }
    }
    ...

Not that complicated, eh? We just iterate over the microservices, and create a build pipeline view for each. All we have to specify is the name of the first job in the pipeline. The others are found by following the downstream cascade. Ok, so configure your seed job to use the pipeline-view.dsl and let it run. Now we have created a pipeline view for every microservice:

If you select one view, you will see the state of all steps in the pipeline:

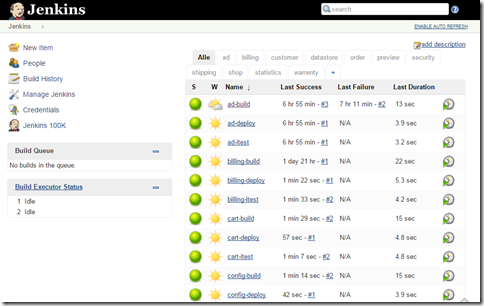
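The build pipeline view takes a few more settings than just the first job. As a sketch of how you might tune it, the following variant also sets a title, the number of displayed pipeline runs, and a refresh interval. The concrete values are assumptions, pick whatever suits your setup.

```groovy
// Sketch only; the title text and the numbers are assumptions.
def slurper = new ConfigSlurper()
// fix classloader problem using ConfigSlurper in job dsl
slurper.classLoader = this.class.classLoader
def config = slurper.parse(readFileFromWorkspace('microservices.dsl'))

config.microservices.each { name, data ->
    buildPipelineView(name) {
        selectedJob("${name}-build")
        title("${name} pipeline")   // heading shown above the pipeline
        displayedBuilds(5)          // number of pipeline runs to show
        refreshFrequency(60)        // refresh interval in seconds
    }
}
```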

Having a single view for each microservice will soon become confusing, but as described in Bringing in the herd, nested views will help you by aggregating information. So we are gonna group all our pipeline views together by nesting them in one view. We are going to generate a nested view containing the build pipeline views of all our microservices. I will list only the difference to the previous example. The complete script is provided for you on GitHub ;-)

pipeline-nested-view.dsl:

    ...
    // create nested build pipeline view
    nestedView('Build Pipeline') {
        description('Shows the service build pipelines')
        columns {
            status()
            weather()
        }
        views {
            config.microservices.each { name, data ->
                println "creating build pipeline subview for ${name}"
                buildPipelineView("${name}") {
                    selectedJob("${name}-build")
                    triggerOnlyLatestJob(true)
                    alwaysAllowManualTrigger(true)
                    showPipelineParameters(true)
                    showPipelineParametersInHeaders(true)
                    showPipelineDefinitionHeader(true)
                    startsWithParameters(true)
                }
            }
        }
    }
    ...

So what do we do here? We create a nested view with the columns status and weather, and create a view of type BuildPipelineView for each microservice. A difference you might notice compared to our previous example is that we are setting some additional properties in the build pipeline view. The point is how we create the view. Before we used the dedicated DSL for the build pipeline view which sets some property values by default. Here we are using the generic view, so in order to get the same result, we have to set these values explicitly. Enough of the big words, configure your seed job to use the pipeline-nested-view.dsl, and let it run.

Note: You need to install the Nested View Plugin into your Jenkins in order to run this example.

Cool. This gives us a nice overview of the state of all our build pipelines. And you can still watch every single pipeline by selecting one of the nested views:

So what have we got so far? Instead of using a hardcoded DSL in the job, we moved it to a dedicated repository. Our seed job watches this repository, and automatically runs on any change. This means that if we alter our job configuration or add a new microservice, the corresponding build jobs are automatically (re-)created. We also created some views to get more insight into the health of our build system.

That’s it for today. In the next and last installment I’d like to give you some hints on how to dig deeper into the Job DSL: Where you will find some more information, where to look if the documentation is missing something, faster turnaround using the playground, and some pitfalls I’ve already fallen into.

Regards

Ralf

Update 08/17/2015: Added fixes by rhinoceros in order to adapt to Job DSL API changes

"I don't know that there are any short cuts to doing a good job." (Sandra Day O'Connor)
